However, since 2012, HPC and DL have been driving one another. On the one hand, HPC is pushing the limits of DL model complexity and neural network depth to increase model accuracy while simultaneously reducing training time. On the other hand, DL is transforming the way we do science. The quest for knowledge used to begin with grand theories, and physics-based models formed the core of our understanding of science. Data-driven models are now beginning to outperform physics-based models on specific tasks, although several challenges, such as model interpretability and generalization, remain to be addressed before such models become commonplace.

This page summarizes our research in accelerating machine and deep learning algorithms using parallel computing as well as applying DL techniques for accelerating scientific computing applications.

This project is currently supported by an ONR award and an NSF OAC award.

Publications

BERN-NN-IBF: Enhancing Neural Network Bound Propagation Through Implicit Bernstein Form and Optimized Tensor Operations

Recent progress of artificial intelligence for liquid-vapor phase change heat transfer

BubbleML: A Multiphysics Dataset and Benchmarks for Machine Learning

Breaking Boundaries: Distributed Domain Decomposition with Scalable Physics-Informed Neural PDE Solvers

ADARNet: Deep Learning Predicts Adaptive Mesh Refinement

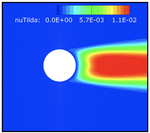

Mosaic flows: A transferable deep learning framework for solving PDEs on unseen domains

SURFNet: Super-resolution of Turbulent Flows with Transfer Learning using Small Datasets

Train Once and Use Forever: Solving Boundary Value Problems in Unseen Domains with Pre-trained Deep Learning Models

Artificial Intelligence and High-Performance Computing: The Drivers of Tomorrow's Science

Brief Announcement: On the Limits of Parallelizing Convolutional Neural Networks on GPUs

CFDNet: A deep learning-based accelerator for fluid simulations

Towards Portable Online Prediction of Network Utilization using MPI-level Monitoring

Portal: A High-Performance Language and Compiler for Parallel N-body Problems

PASCAL: A Parallel Algorithmic SCALable Framework for N-body Problems

Talks

Transferable Deep Learning Surrogates for Solving PDEs

SPCL_Bcast online seminar at ETH Zurich

On the Limits of Parallelizing Convolutional Neural Networks on GPUs

ACM Symposium on Parallelism in Algorithms and Architectures (SPAA)